Hierarchical Clustering: Types, Linkage Methods, How It Works & Applications

Published: 10 May 2025

Hierarchical Cluster Analysis

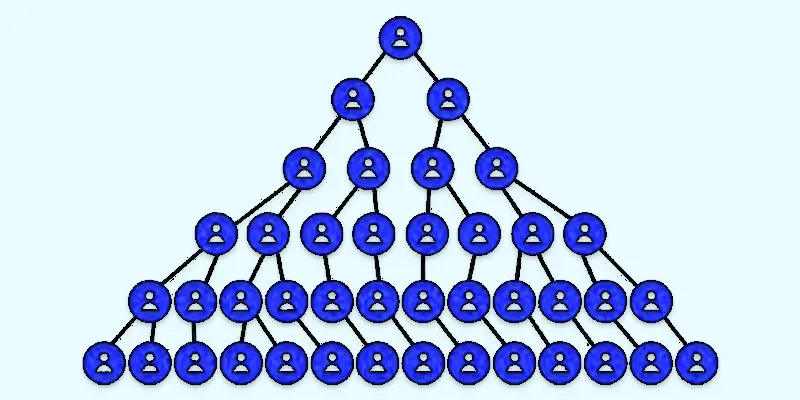

Hierarchical Cluster Analysis (HCA) is a method for progressively grouping related data points into clusters. It produces a tree-like structure called a dendrogram, which shows how data points and clusters relate to one another. The method is used in many fields, such as marketing, biology, and image processing. Unlike some other clustering methods, such as K-means, HCA does not require the number of clusters to be chosen in advance. It's an excellent tool for organizing data clearly and meaningfully!

What Is Hierarchical Cluster Analysis?

Hierarchical cluster analysis, or HCA, is a technique for grouping related data points into clusters according to their similarities. It produces a dendrogram, a tree-like structure that shows how groups split or merge at various levels. HCA is used mainly in data analysis, pattern recognition, and classification tasks where the number of clusters is not known beforehand.

Hierarchical Clustering Types

- Agglomerative Hierarchical Clustering (Bottom-Up)

- Divisive Hierarchical Clustering (Top-Down)

Agglomerative Hierarchical Clustering (Bottom-Up)

Agglomerative hierarchical clustering is a bottom-up technique that starts with each data point as a separate cluster. The closest clusters are then merged step by step according to how similar they are. This process repeats until all points belong to a single large cluster. It is the most commonly used hierarchical clustering method because it is simple and easy to understand.

Divisive Hierarchical Clustering (Top-Down)

Divisive hierarchical clustering is a top-down method. All data points start in a single large cluster, which is gradually divided into smaller groups. The process repeats until every data point forms its own cluster. This approach is less popular because it is more complicated and involves more computation, although it can be helpful for some kinds of data analysis.
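
To make the top-down idea concrete, here is a rough sketch in plain Python. It is only one simple way to split clusters, not a standard algorithm: it assumes Euclidean distance, always splits the cluster with the largest diameter, and uses that cluster's two most distant points as seeds. The function names (`diameter`, `split`, `divisive`) and the sample points are made up for this illustration.

```python
from math import dist  # Euclidean distance (Python 3.8+)


def diameter(cluster):
    """Largest distance between any two members (0.0 for a single point)."""
    return max((dist(p, q) for p in cluster for q in cluster), default=0.0)


def split(cluster):
    """Split one cluster in two around its two most distant points."""
    seed_a, seed_b = max(
        ((p, q) for p in cluster for q in cluster),
        key=lambda pair: dist(*pair),
    )
    # Each point joins whichever seed it is closer to (ties go to seed_a).
    near_a = [p for p in cluster if dist(p, seed_a) <= dist(p, seed_b)]
    near_b = [p for p in cluster if dist(p, seed_a) > dist(p, seed_b)]
    return near_a, near_b


def divisive(points, k):
    """Start from one big cluster and keep splitting until k clusters remain."""
    clusters = [list(points)]
    while len(clusters) < k:
        widest = max(clusters, key=diameter)
        if diameter(widest) == 0.0:
            break  # nothing left that can be split
        clusters.remove(widest)
        clusters.extend(split(widest))
    return clusters


points = [(0.0, 0.0), (0.0, 1.0), (5.0, 5.0), (5.0, 6.0), (10.0, 0.0)]
print(sorted(divisive(points, 3)))
```

With these sample points, the isolated point at (10, 0) is separated first, and the remaining four points are then split into the two tight pairs.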

How Hierarchical Clustering Works (Step-by-Step Guide)

Hierarchical clustering groups similar data step by step, forming a tree-like structure called a dendrogram.

Start with Individual Data Points

- Every data point is treated as its own cluster.

Measure the Distance Between Points

- To determine how close the points are to one another, use a distance metric (such as the Euclidean distance).

Merge the Closest Points

- The two data points or clusters that are most similar are combined into a new cluster.

Repeat the Procedure

- Keep merging the closest clusters, step by step, until all points belong to a single large cluster.

Make a Dendrogram

- A dendrogram (tree diagram) is drawn to show how clusters are formed at each step.

Choose the Number of Clusters

- To obtain the required number of clusters, cut the dendrogram at a specific level.
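
The steps above can be sketched in a few lines of plain Python. This is a minimal illustration, assuming Euclidean distance and single linkage (the closest pair of members decides how far apart two clusters are). The function names and sample points are invented for this example, and the brute-force search is much slower than what a dedicated library would do.

```python
from math import dist  # Euclidean distance (Python 3.8+)


def single_linkage(a, b, points):
    """Distance between clusters a and b: their closest pair of members."""
    return min(dist(points[i], points[j]) for i in a for j in b)


def merge(clusters, a, b):
    """Replace clusters a and b with their union."""
    return [c for c in clusters if c not in (a, b)] + [tuple(sorted(a + b))]


def agglomerate(points):
    """Merge the two closest clusters until only one remains.

    Returns the merge history as (cluster_a, cluster_b, distance) tuples,
    which is the information a dendrogram draws.
    """
    clusters = [(i,) for i in range(len(points))]  # one cluster per point
    history = []
    while len(clusters) > 1:
        # Measure every pair of clusters and pick the closest one.
        d, a, b = min(
            (single_linkage(a, b, points), a, b)
            for idx, a in enumerate(clusters)
            for b in clusters[idx + 1:]
        )
        clusters = merge(clusters, a, b)
        history.append((a, b, d))
    return history


def cut(points, history, k):
    """Replay the merges until k clusters remain (cutting the dendrogram)."""
    clusters = [(i,) for i in range(len(points))]
    for a, b, _ in history[: len(points) - k]:
        clusters = merge(clusters, a, b)
    return sorted(clusters)


points = [(0.0, 0.0), (0.0, 1.0), (5.0, 5.0), (5.0, 6.0), (10.0, 0.0)]
history = agglomerate(points)
print(cut(points, history, 3))  # clusters are tuples of point indices
```

Asking for three clusters here groups points 0 and 1, points 2 and 3, and leaves point 4 on its own. Because the full merge history is kept, any other number of clusters can be read off without re-running the merging.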

Linkage Methods in Hierarchical Clustering

Linkage methods define how the distance between two clusters is computed when deciding which clusters to merge. There are four primary types:

Single Linkage

- Uses the shortest distance between any two points, one from each cluster.

- Can create long, chain-like clusters.

Complete Linkage

- Uses the greatest distance between any two points, one from each cluster.

- Tends to produce compact clusters of roughly similar size.

Average Linkage

- Uses the average distance between all pairs of points, one from each cluster.

- Strikes a balance between single and complete linkage.

Centroid Linkage

- Uses the center point (centroid) of each cluster to measure distance.

- Works well with spherical clusters.
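
The four linkage methods differ only in how they turn point-to-point distances into one cluster-to-cluster distance. The sketch below, assuming Euclidean distance and two small made-up clusters, computes all four side by side. The function names are chosen for this example only.

```python
from math import dist  # Euclidean distance (Python 3.8+)


def single(a, b):
    """Shortest distance between a point in a and a point in b."""
    return min(dist(p, q) for p in a for q in b)


def complete(a, b):
    """Greatest distance between a point in a and a point in b."""
    return max(dist(p, q) for p in a for q in b)


def average(a, b):
    """Mean distance over every cross-cluster pair of points."""
    return sum(dist(p, q) for p in a for q in b) / (len(a) * len(b))


def centroid(a, b):
    """Distance between the two cluster centers."""
    def center(cluster):
        return tuple(sum(axis) / len(cluster) for axis in zip(*cluster))
    return dist(center(a), center(b))


left = [(0.0, 0.0), (0.0, 6.0)]
right = [(8.0, 0.0), (8.0, 6.0)]

for name, linkage in [("single", single), ("complete", complete),
                      ("average", average), ("centroid", centroid)]:
    print(f"{name:>8}: {linkage(left, right):.1f}")
```

For these two clusters the results are 8.0 (single), 10.0 (complete), 9.0 (average), and 8.0 (centroid), so the same pair of clusters can look closer or farther apart depending on the linkage chosen.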

Advantages and Disadvantages of Hierarchical Cluster Analysis (HCA)

Hierarchical Cluster Analysis (HCA) has both advantages and disadvantages. Although it helps organize data effectively, it is not always the best option for large datasets.

Advantages

- The number of clusters does not need to be predetermined.

- Creates a clear dendrogram for visualization

- Works well for small datasets

- Supports different distance metrics and linkage methods

Disadvantages

- Slow for large datasets

- Sensitive to noise and outliers

- Difficult to determine the best number of clusters

- Merging in agglomerative clustering is irreversible
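
To see why large datasets are a problem, it helps to count how many point-to-point distances there are. The short sketch below assumes the usual starting point of measuring every pair of points once; `pairwise_count` is a name made up for this example.

```python
def pairwise_count(n):
    """Number of distinct point pairs among n points."""
    return n * (n - 1) // 2


for n in (100, 10_000, 1_000_000):
    print(f"{n:>9,} points -> {pairwise_count(n):>15,} pairwise distances")
```

The count grows with the square of the dataset size: 100 points give 4,950 pairs, while a million points give roughly 500 billion, which is why sampling is often suggested before clustering big data hierarchically.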

Applications of Hierarchical Clustering in Real Life

Hierarchical clustering is widely used in many fields to group related data and identify trends. It helps in organizing information, making predictions, and improving decision-making.

Real-Life Applications

- Marketing Segmentation – Groups customers based on purchasing behavior.

- Biological Classification – Organizes species into hierarchical categories.

- Image Segmentation – Helps in object detection and facial recognition.

- Anomaly Detection – Identifies fraud in banking and cybersecurity.

- Medical Diagnosis – Classifies diseases based on patient symptoms.

- Social Network Analysis – Finds communities and relationships between users.

- Document Clustering – Groups similar articles or research papers.

- Genetics and DNA Sequencing – Helps in understanding gene similarities.

- Retail and Recommendation Systems – Clusters products for personalized recommendations.

Tips for Beginners Using Hierarchical Clustering

- Understand the Data First – Analyze your dataset and clean any missing or incorrect values.

- Choose the Right Distance Metric – Use Euclidean distance for numerical data and other metrics for text or categorical data (for example, cosine distance for text or Hamming distance for categorical values).

- Select an Appropriate Linkage Method – Try single, complete, average, or centroid linkage based on your clustering needs.

- Use a Dendrogram for Better Insights – A dendrogram helps visualize clusters and decide how many to keep.

- Be Careful with Large Datasets – HCA is slow for big data; consider sampling or other clustering methods.

- Handle Outliers Properly – Outliers can affect clustering results, so remove or analyze them separately.

- Test Different Parameters – Experiment with different linkage and distance settings to get the best results.

- Use Clustering Validation – Check the Silhouette Score or Dunn Index to measure clustering quality.
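
As an example of the last tip, here is a small from-scratch sketch of the Silhouette Score, assuming Euclidean distance and at least two clusters. For each point it compares the average distance to its own cluster (a) with the average distance to the nearest other cluster (b). The function name and the sample groupings are invented for this illustration.

```python
from math import dist  # Euclidean distance (Python 3.8+)
from statistics import mean


def silhouette_score(clusters):
    """Mean silhouette over all points; `clusters` is a list of point lists.

    Assumes at least two clusters and not all points identical.
    """
    scores = []
    for idx, own in enumerate(clusters):
        others = [c for j, c in enumerate(clusters) if j != idx]
        for p in own:
            if len(own) == 1:
                scores.append(0.0)  # usual convention for singleton clusters
                continue
            # a: mean distance to the other members of p's own cluster
            a = sum(dist(p, q) for q in own) / (len(own) - 1)
            # b: mean distance to the members of the nearest other cluster
            b = min(mean(dist(p, q) for q in c) for c in others)
            scores.append((b - a) / max(a, b))
    return mean(scores)


good = [[(0.0, 0.0), (0.0, 1.0)], [(5.0, 5.0), (5.0, 6.0)]]
bad = [[(0.0, 0.0), (5.0, 5.0)], [(0.0, 1.0), (5.0, 6.0)]]

print(f"sensible grouping: {silhouette_score(good):.2f}")
print(f"mixed-up grouping: {silhouette_score(bad):.2f}")
```

Scores range from -1 to 1. The sensible grouping scores close to 1, while the mixed-up grouping scores below 0, which signals that points sit closer to another cluster than to their own.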

Conclusion About Hierarchical Cluster Analysis

We’ve covered hierarchical cluster analysis in detail. It is a powerful technique for grouping similar data and understanding patterns. If you’re a beginner, start with small datasets and experiment with different linkage methods to see what works best. I highly recommend using a dendrogram to visualize clusters and make informed decisions. Try applying hierarchical clustering to your own data and see how it helps—let me know your thoughts in the comments!

FAQs About Hierarchical Clustering

Hierarchical clustering is an unsupervised learning technique. It groups similar data items without prior labels. This means the algorithm finds patterns without labeled examples to guide it.

Use hierarchical clustering for small datasets where a clear visual structure (a dendrogram) is useful. For a larger dataset where you already know the number of clusters, go with K-means. K-means is quicker, while hierarchical clustering shows how groups nest inside one another at every level.

Hierarchical analysis is a step-by-step approach to breaking down complex data into smaller, structured groups. It is used in clustering, decision-making, and classification tasks. This approach makes relationships in the data easier to understand.

The four common types of cluster analysis are:

- Hierarchical Clustering – Creates a dendrogram, or tree-like structure.

- Partitioning Clustering – Divides data into a predetermined number of clusters (e.g., K-means).

- Density-Based Clustering – Uses data density to identify clusters (e.g., DBSCAN).

- Model-Based Clustering – Uses probability models to group data.

Clusters themselves can be hard or soft:

- Hard Clusters – Every data point is a member of a single cluster (e.g., hierarchical clustering, K-means).

- Soft Clusters (Fuzzy Clusters) – A data point can belong to multiple clusters with different probabilities (e.g., Fuzzy C-means).

A dendrogram is a tree-like diagram that illustrates how groups merge or split. Cutting the tree at a specific level helps determine a suitable number of clusters. It is an essential part of hierarchical clustering.
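
Cutting a dendrogram at a chosen level can be sketched as follows. The merge record below is hypothetical: each entry names one point from each of the two clusters being joined, plus the height (distance) at which they join. Every merge at or below the cut height is applied and the rest are ignored. The function name `cut_at_height` is made up for this example.

```python
def cut_at_height(n_points, merges, height):
    """Return the clusters formed by all merges at or below `height`."""
    parent = list(range(n_points))  # each point starts as its own group

    def find(i):
        # Follow parent links up to the representative of i's group.
        while parent[i] != i:
            i = parent[i]
        return i

    for a, b, h in merges:
        if h <= height:
            parent[find(a)] = find(b)  # join the two groups

    groups = {}
    for i in range(n_points):
        groups.setdefault(find(i), []).append(i)
    return sorted(groups.values())


# Hypothetical merge record for five points:
# (point in first cluster, point in second cluster, merge height)
merges = [(0, 1, 1.0), (2, 3, 1.0), (1, 2, 6.4), (2, 4, 7.1)]

for height in (0.5, 2.0, 6.5, 8.0):
    print(height, cut_at_height(5, merges, height))
```

A low cut (0.5) leaves every point alone, a cut at 2.0 gives three clusters, a cut at 6.5 gives two, and a cut above the last merge (8.0) returns a single cluster. Moving the cut up or down is what changes the number of clusters.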

An example is biological classification, where animals and plants are grouped based on similarities. Another example is customer segmentation, where businesses group customers based on purchase behaviour. It is also used in medical diagnosis for grouping diseases.

Flat clustering (e.g., K-means) groups data into a fixed set of clusters without a hierarchy. Hierarchical clustering creates a tree-like structure in which clusters can be split or merged. Flat clustering is quicker, while hierarchical clustering gives a more detailed picture of how the data is organized.

